SeekrFlow at the edge

Build with SeekrFlow.

Deploy anywhere.

Deploy AI closer to your users—even in air-gapped and edge environments. Seekr’s edge solutions come preloaded with SeekrFlow, models, storage, and networking, so you can launch powerful AI workloads in a fraction of the time.

Self-contained and purpose-built for high-compute AI workflows

A complete AI software stack for rapid prototyping

Get started in hours with fine-tuning, inference, agentic AI workflows, and more. Seekr’s edge appliance comes with SeekrFlow built in, offers access to PyTorch and LLaMA for advanced workloads, and runs on Red Hat OpenShift and Red Hat Enterprise Linux AI for scalability.

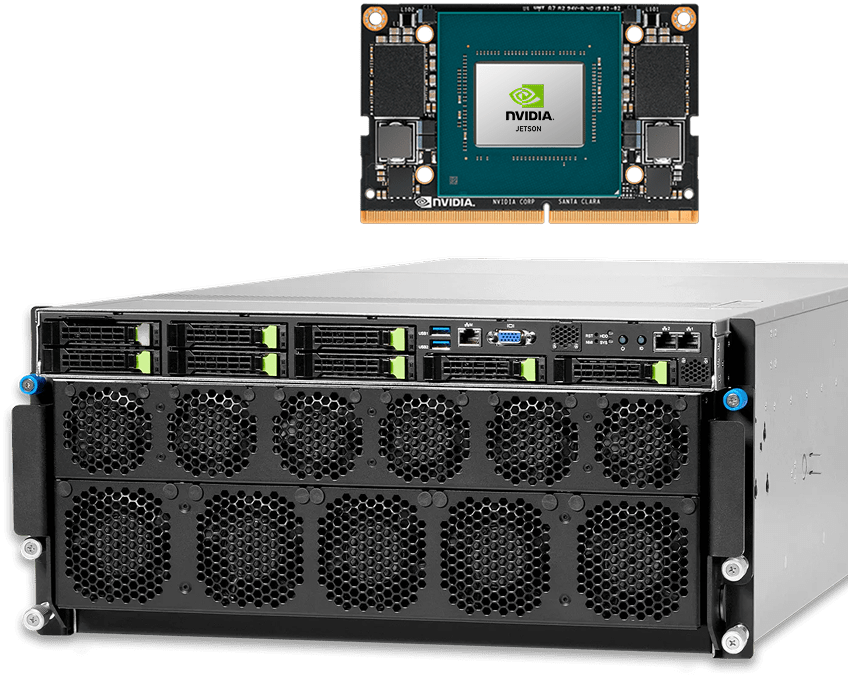

Customizable hardware, optimized for AI

Choose from trusted hardware providers, including HP, Dell, Lenovo, and Supermicro, paired with high-performance GPUs such as the NVIDIA A100, H100, or H200 or the AMD MI300X to power your most demanding AI workloads with speed and efficiency.

Built to power high-impact apps and agents

- Large language models (LLMs)
- Fine-tuning and inference
- AI agent-based tasks
- Machine learning
- Deep learning
- Data analysis tasks