Dr. Poulis oversees the teams responsible for the research and development of the AI technology that powers Seekr products.

A key component of the Fourth Industrial Revolution, artificial intelligence (AI) has the potential to solve real business problems across industries with greater accuracy and consistency than any previous solution.

However, using AI to make decisions on behalf of humans can have a significant impact on people’s lives. Businesses and institutions, for example, may deploy AI to determine who gets approved for a loan or a credit card, which patient receives which medical treatment, or which job applicant will be hired. With such great implications for society, it’s crucial for AI models to be built with trust and transparency at their core.

Building trust through bias mitigation

Trust needs to be integrated into each phase of the AI building process. Biases and noise are inherently present in all data on some level, and just as humans do, models inherit the biases present in the information they’re trained on. Without processes to mitigate these biases, they will manifest as potentially unfair outcomes and negative experiences for the end users of downstream applications.

The first step in building trustworthy AI models is to deeply understand the data. At Seekr, we have established a framework that governs the development of the AI that drives our products. Part of this framework is ensuring that we understand the distributions of the data that we use for model building and study inherent biases and their effect. We implement these safeguards by teaching the AI to be aware of biases, and then we vet the generated output to reduce biased responses, hallucinations, and errors even further.
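As a rough illustration of what understanding the distributions of the data can look like in practice, the sketch below compares how often a positive label appears in each group of a dataset. It is a minimal example under stated assumptions, not a description of Seekr’s framework: the function names, the `region` and `approved` fields, and the toy records are all hypothetical.

```python
from collections import Counter


def outcome_rates_by_group(records, group_key, label_key, positive_label):
    """Return {group: share of that group's records carrying the positive label}."""
    totals = Counter()
    positives = Counter()
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[label_key] == positive_label:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in positive-label rate between any two groups."""
    values = list(rates.values())
    return max(values) - min(values)


# Hypothetical toy records; the field names are assumptions for illustration.
sample = [
    {"region": "north", "approved": 1},
    {"region": "north", "approved": 1},
    {"region": "north", "approved": 0},
    {"region": "north", "approved": 1},
    {"region": "south", "approved": 0},
    {"region": "south", "approved": 1},
    {"region": "south", "approved": 0},
    {"region": "south", "approved": 0},
]

rates = outcome_rates_by_group(sample, "region", "approved", 1)
gap = max_rate_gap(rates)
print(rates)  # share of approved records per region
print(gap)    # spread between the highest and lowest group rate
```

A large gap does not prove the data is unfair, but it flags a distribution worth studying before the data is used for model building.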

Vetting data with a human-in-the-loop approach

This process originated from Seekr’s extensive background in content scoring, which has uniquely positioned us to build AI foundation models that are able to reason and to critically present all perspectives rather than greedily choosing the most likely output token.

Additionally, we involve human experts in specific domains in the training, testing, and validation of the model. They help improve our models by:

- Annotating datasets

- Reviewing and correcting model results

- Auditing and analyzing model explanations

- Ensuring they can trust the outcomes the model produces

Their feedback and analysis are always fed back into the model. These signals are then reinforced so that the model better understands how a human expert truly thinks about that specific topic and can follow the same logic at inference time.

After laying this foundation, the next step involves training the AI model on industry-specific information to make more accurate decisions.

Training AI for domain expertise

Every domain we operate in requires its own level of domain expertise. For example, the AI model behind SeekrAlign needs to understand brand safety and suitability frameworks within the nuances of spoken language to accurately evaluate content for the advertising industry.

Therefore, our higher-level approach is to build AI models that are able to think like domain experts when making decisions, generating content, extracting insights, and analyzing information. We accomplish this by abstracting the domain expertise into principles that we then use to align our AI models. The result is a model that knows how to behave like a human expert in that domain.

Principled AI for real business value

Pre-trained foundation models are trained on massive datasets, and as the prefix “pre” implies, there is a lot more room for improvement on, say, business-specific tasks. This is because pre-trained models may not understand the rules, regulations, and values of a specific business or domain.

For AI to drive real value, it needs to be trained in the business’s area of expertise, whether that be journalistic principles, brand safety, legal requirements, or food compliance regulations. Moreover, many businesses operate in domains governed by specific principles, regulations, and values, which means they must deploy AI that respects those rules to produce more accurate and reliable outcomes.

This ability becomes especially crucial for industries that operate in domains where trust is mandatory, including financial services, healthcare, and government.

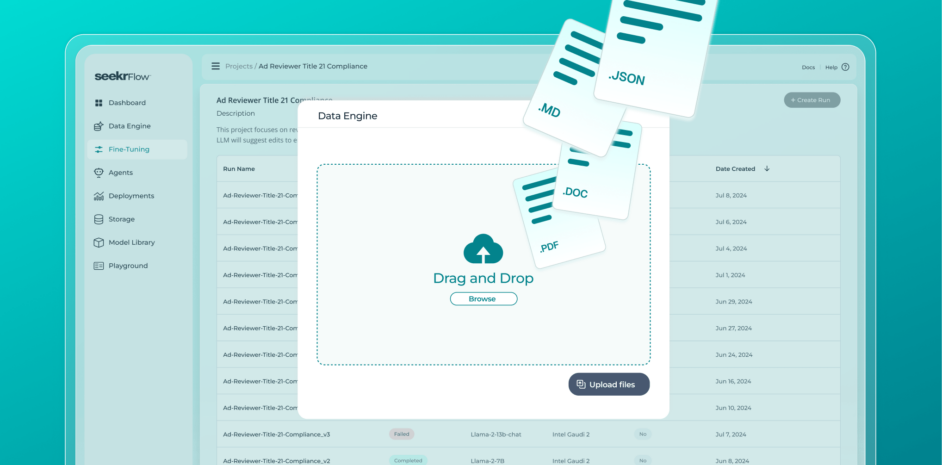

SeekrFlow™ enables this principle alignment step. Businesses can leverage our proprietary technology to train AI models on their predefined principles, company values, and industry regulations. As a result, the model will know how to respect their guidance and behave within the boundaries and regulations of the specific domain.

Trust in AI requires explainability

Another component of the framework that we have established at Seekr to govern responsible AI development is explainability. We need to be able to open the black box and lay out the decision-making process behind AI outcomes. This allows us to understand why the model made a decision, whether we can trust it, and whether that decision needs to be contested at its core to improve accuracy and reliability over time.

SeekrFlow is built with this level of explainability at its core, where the AI model’s decision-making process can be laid out, understood, and trusted by a human.