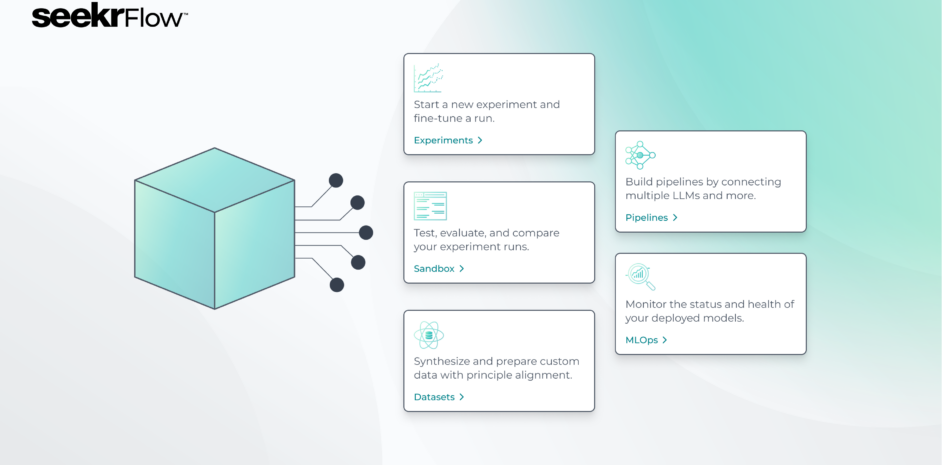

Today, we’re announcing the public release of SeekrFlow™, an AI development platform that empowers enterprises to develop, validate, scale, and run custom AI applications in one simple interface.

Overcoming problems in responsible AI adoption

Demand for enterprise AI is growing rapidly across industries, but enterprises face several challenges in AI adoption, including:

Cost: The true lifecycle cost of AI adoption is substantial, with significant investments required for hardware, software, human resources, and ongoing operational costs.

Complexity: Managing compute resources, data, and model deployment can overwhelm organizations and hinder successful AI implementation.

Accuracy: To maintain accuracy, enterprises need to continually monitor and update their models to address biases and errors in training data.

Explainability: Enterprises often struggle to understand and optimize their models. Without that understanding, organizations may face resistance from stakeholders and regulatory bodies, complicating their ability to build customer-facing applications.

SeekrFlow addresses these challenges through one intuitive interface that streamlines the entire large language model (LLM) development and deployment process.

A new standard for end-to-end AI development

With SeekrFlow, enterprises no longer need to piece together solutions across the AI development lifecycle. The platform integrates data preparation, fine-tuning, hosting, and inference to enable faster experimentation and adaptation of LLM behavior to meet the dynamic demands of successful AI adoption.

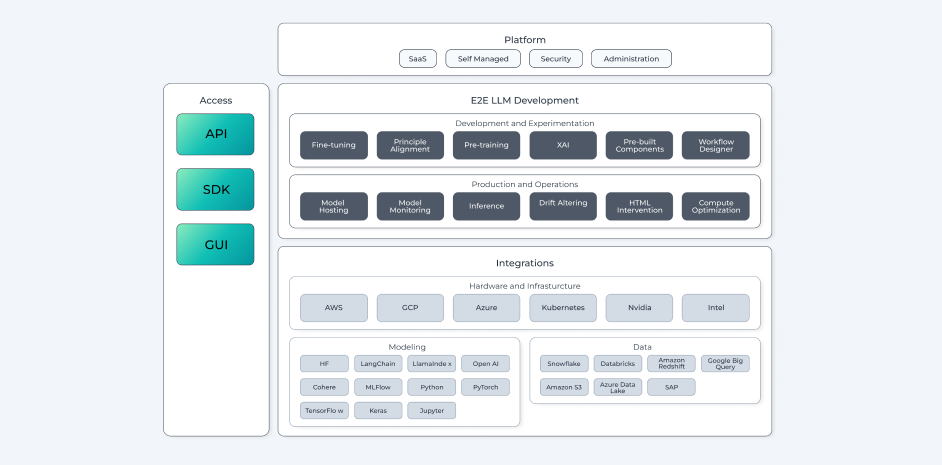

Manage AI workloads from start to finish

Developers can train and deploy LLMs with a single API call, or use the software development kit (SDK) or graphical user interface (GUI). SeekrFlow’s compatibility with leading hardware and cloud platforms optimizes LLM efficiency from training to production, resulting in a significant reduction in cost to enterprises.

To support reliability and transparency, SeekrFlow features fine-tuning and explainability tools that enable users to both control LLM behavior during training and better understand how their model makes decisions.

Build industry-specific solutions with greater accuracy and efficiency

Controlling an LLM’s behavior is difficult. Traditional methods require a heavy upfront investment in large amounts of data and continuous adjustments. While newer techniques such as RAG can improve output predictability, they rely heavily on large amounts of labeled data, which can be just as expensive, time-consuming, and inflexible.

SeekrFlow has developed its own principle alignment agent to guide users through the entire data preparation and fine-tuning process. This unique technology allows developers of all skill levels to align AI to their enterprise’s desired principles, values, and industry regulations without the labor-intensive need to gather and process vast amounts of data.

With SeekrFlow’s AI agent, developers can also automatically generate synthetic data that explores the boundaries of their defined principles, reducing cost and time to market.

“Partnering with Seekr has enabled us to provide truly individualized and customized information, analysis, and recommendations to Haystack users. Seekr’s LLM produces fast, accurate, reliable and high-quality content through the Haystack chatbot and it is continually learning and adapting to the needs of Haystack users.”

Nikhil Sinha, CEO at OneValley

Building trust through model-agnostic explainability tools

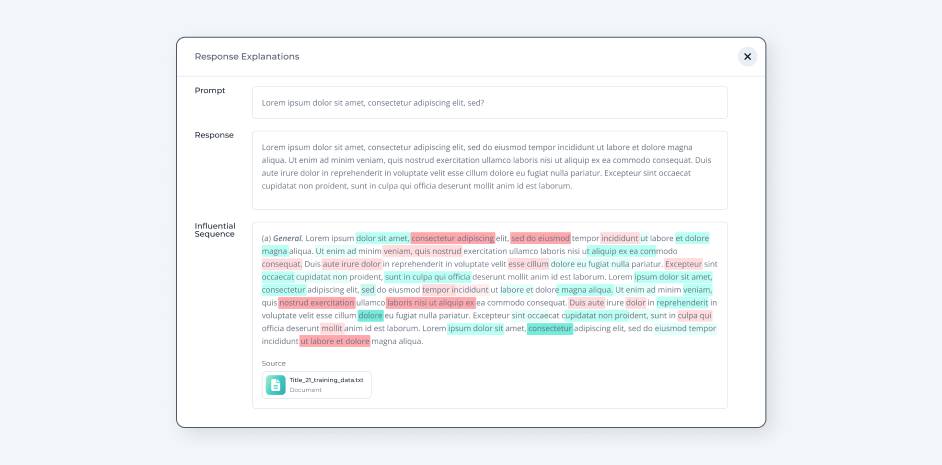

ML models can be opaque black boxes—users often don’t understand how the model arrived at its decision. This leads to debugging challenges, fairness concerns, and reduced trust in the model.

Beyond understanding, developers also need to be able to contest a model’s decisions. Without interpretability tools to support that interaction, contesting a decision is virtually impossible.

SeekrFlow’s proprietary explainability tools provide detailed insights into the internal workings of complex models, regardless of their specific architecture. They reveal the intermediate reasoning steps and promote contestability to give enterprises confidence in their models’ decision-making.

Solving the cost of compute in collaboration with Intel

A growing barrier to entry in enterprise AI adoption is access to affordable and available compute resources to manage AI workloads.

Through our collaboration with Intel, enterprises can now access trustworthy and responsible LLMs at the best price-performance in the Intel Tiber Developer Cloud using the latest Gaudi AI accelerators and Xeon CPUs.

SeekrFlow is available on and optimized for Intel (Gaudi 2, GPU Max 1550, 4th Gen Xeon SR, upcoming Gaudi 3) and NVIDIA hardware. It is compatible with leading cloud and hardware providers.

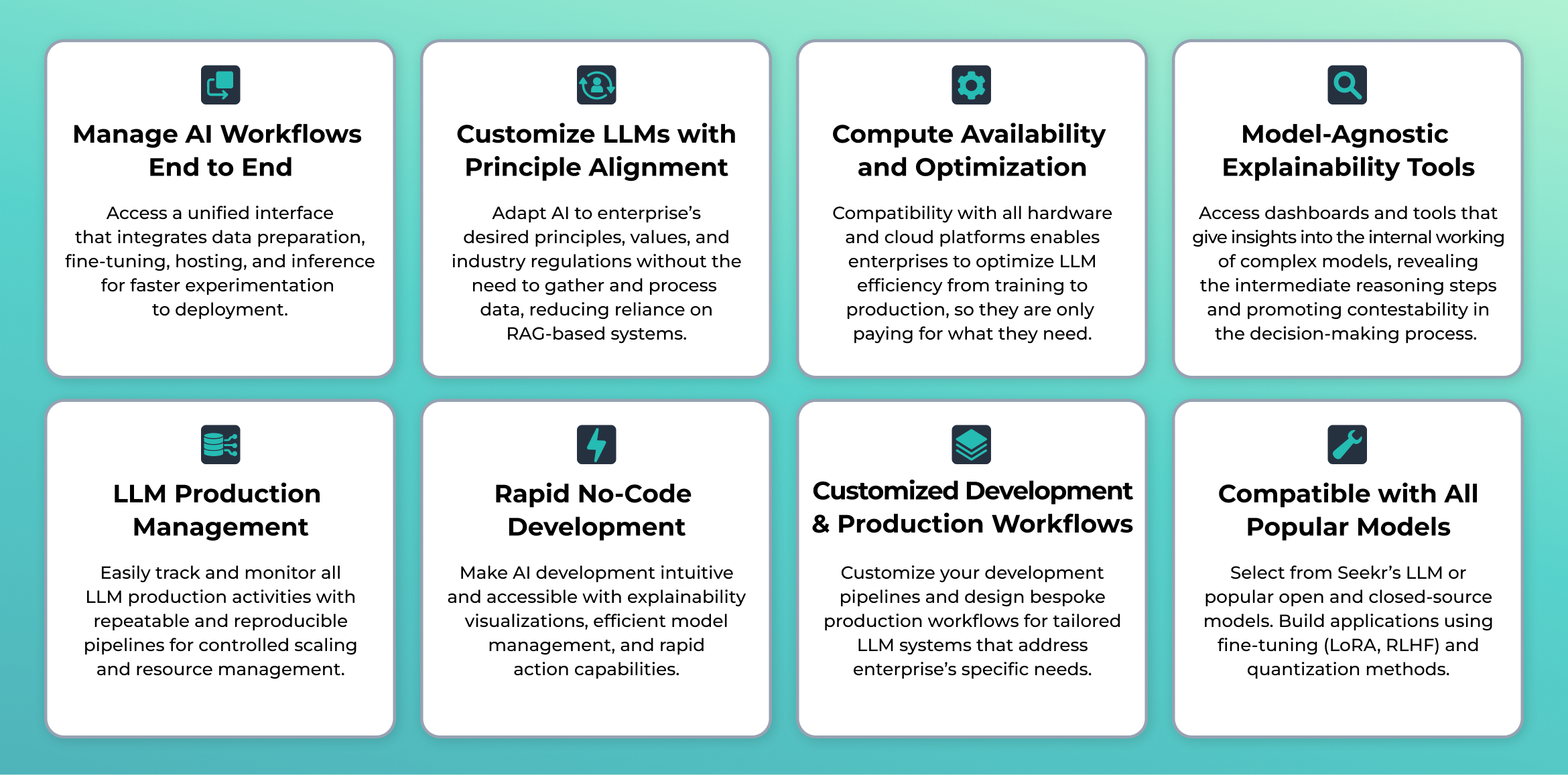

Core components of the SeekrFlow platform

Here’s a complete breakdown of the feature set SeekrFlow offers to launch AI in one place.

Start building with SeekrFlow

SeekrFlow’s one-of-a-kind solution overcomes the biggest problems enterprises face in adopting generative AI and realizing its ROI. Through one intuitive end-to-end platform, businesses in any industry can start developing custom AI solutions to address their specific needs and accelerate business transformation.