The end-to-end platform for trusted AI

SeekrFlow™ helps you train, validate, deploy, and scale trusted AI applications in one complete, secure platform. From seamless data prep and model training to secure deployment, we deliver the tools you need to accelerate your AI journey with confidence.

Build trust into every stage of the AI lifecycle

Fully customizable AI for your use case

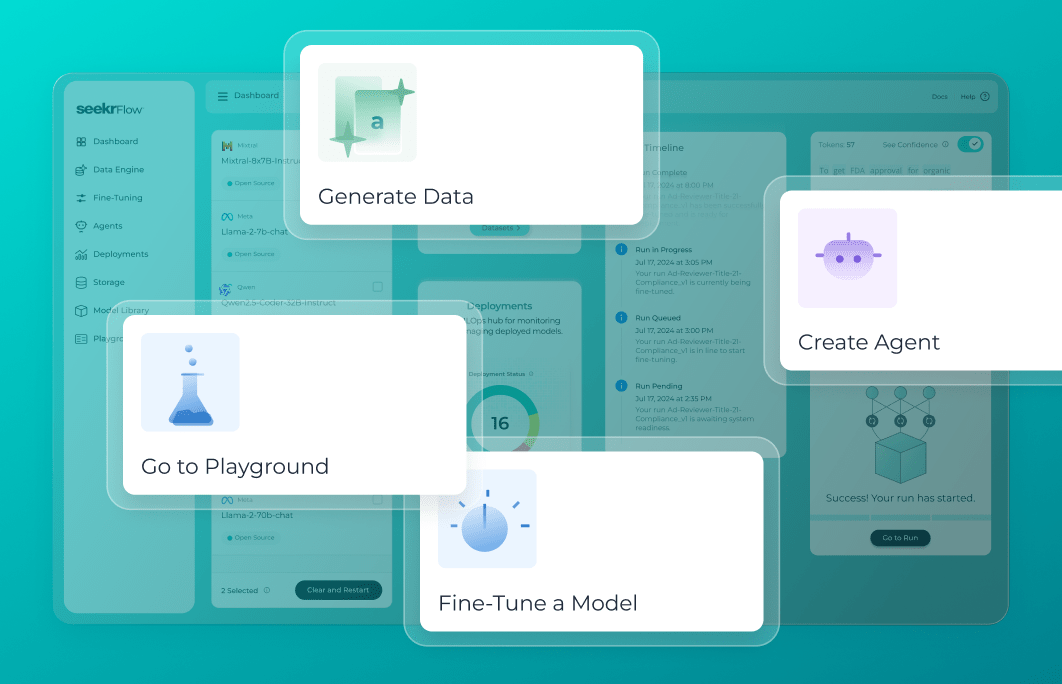

Build your AI application with open-source models for RAG and fine-tuning without black-box constraints. Our complete end-to-end platform gives you the flexibility to scale, ensuring your AI adapts to your enterprise needs with full configurability and control.

End-to-end AI workflow management made simple

Manage the entire AI lifecycle with a single API call, SDK, or intuitive no-code interface. SeekrFlow seamlessly integrates with any cloud or hardware, optimizing efficiency across data preparation, training, deployment, and inference, so you can simplify operations and pay only for what you need.

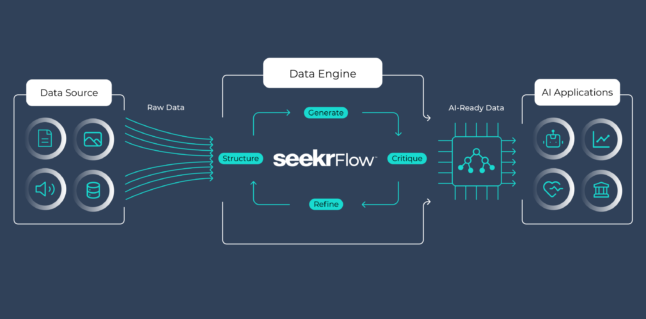

Train trusted models at a fraction of the cost and time

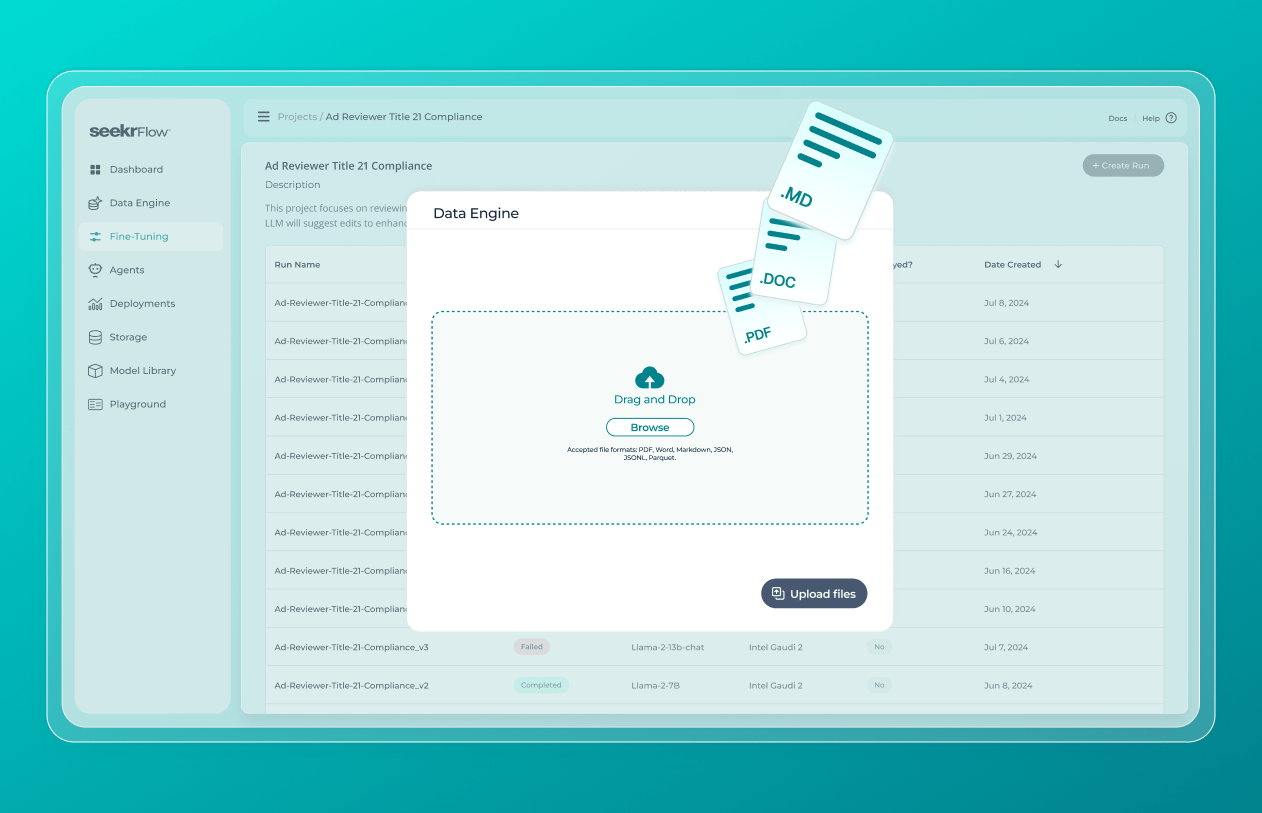

Effortlessly train AI models powered by your data. Our data preparation feature automatically ingests, structures, and optimizes unstructured enterprise data, turning it into a high-quality, LLM-ready dataset for fine-tuning and retrieval and enhancing model accuracy by 3x and relevance by 6x.

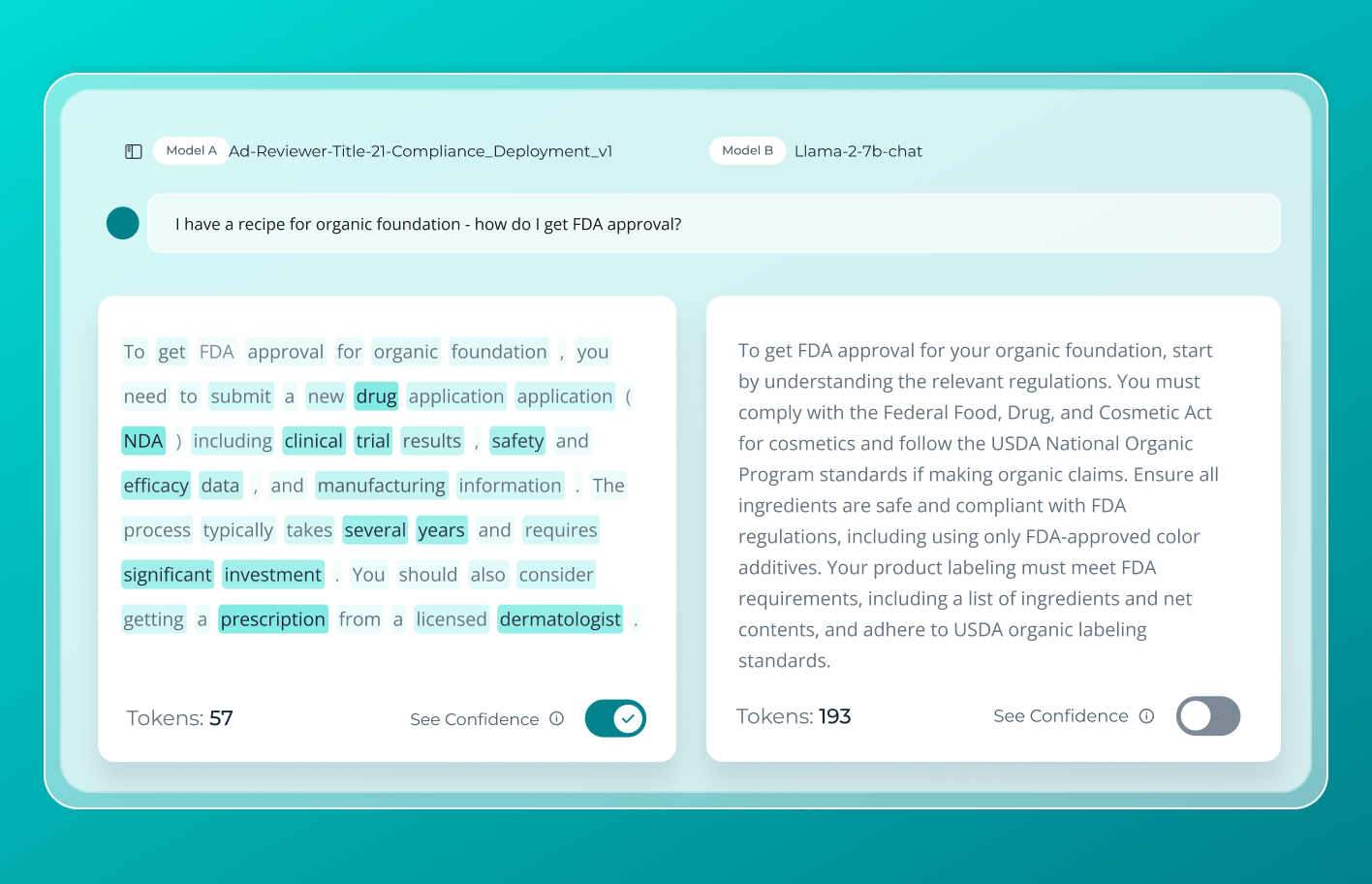

Validate and contest model accuracy with confidence

Leverage rich explainability tools and human-in-the-loop oversight to validate model accuracy. Token-level confidence scores, side-by-side prompt comparisons, and input parameters offer the control and transparency you need to launch AI applications with confidence.

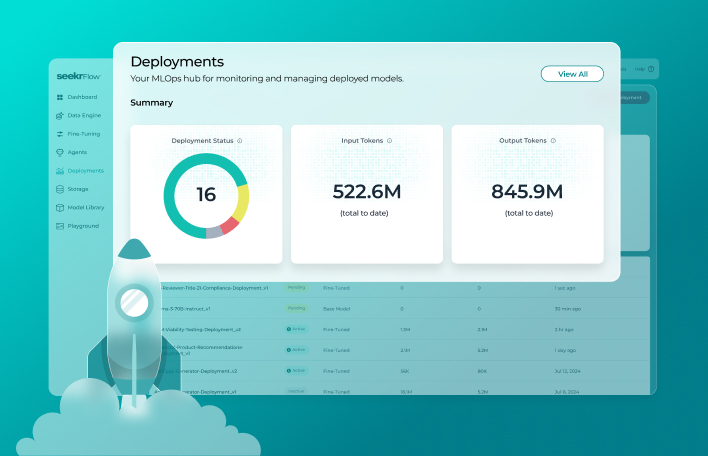

Fast, reliable deployment with continuous monitoring

Manually deploying machine learning models is time-consuming and error-prone. Simplify the process with our five-click deployment and real-time production monitoring, ensuring reliable performance and robust tracking at every stage of the lifecycle.

Solve enterprise challenges with custom apps and agents

Deploy Seekr AI anywhere

AI as a Service

Cloud service providers

On-premises

At the edge

Build in the Foundry. Deploy securely at the edge.

Start using SeekrFlow within hours, without configuring infrastructure. The Seekr Edge Appliance is a pre-configured, all-in-one solution designed for rapid deployment of AI workloads in air-gapped environments and standalone data centers. Access GPUs and AI accelerators outside the cloud, avoid costly data movement, and support low-latency AI applications with ease.