Key takeaways:

- In ‘black box’ AI systems, developers can’t explain the decision-making process behind model results.

- Explainable AI (XAI) is a set of techniques designed to overcome the black box problem by helping users understand how AI models make decisions.

- Explainability correlates with positive ROI. Companies that follow best practices in explainability are seeing the biggest returns from AI.

- Explainable AI isn’t just a nice-to-have—it’s now a requirement for enterprises. Regulatory organizations are developing legal frameworks to ensure transparency, fairness, and accountability in AI systems.

- XAI becomes especially critical in highly regulated industries like government, healthcare, and finance, where automated decision-making can have a significant impact on people’s lives.

- The SeekrFlow™ enterprise AI platform provides explainability tools that help developers understand, contest, and improve model outputs to deploy more reliable AI applications to the end user.

- Contact our team to learn how SeekrFlow can help enterprises deploy explainable AI applications that build trust with users and drive ROI.

What is explainable AI?

Explainable AI (XAI) refers to a set of techniques designed to make the decision-making processes of AI models understandable to humans.

Unlike traditional software, where logic is explicitly defined in code, AI and ML models learn their behavior from data and encode it across millions or billions of parameters rather than in human-readable rules. This learned, often non-deterministic behavior creates a black box, making it difficult to trace how an AI model arrived at a particular output and what data it relied on to get there.

XAI aims to solve this problem by providing tools that help users understand and optimize model decision-making. Instead of relying on a single method, XAI applies various techniques throughout the AI development lifecycle, from data transparency to prompt engineering and deployment, to clarify model predictions and improve their accuracy.

The link between explainability and ROI

Enterprises often struggle to understand and optimize their models, which creates internal resistance and hinders their ability to build customer-facing applications. Stanford University’s Foundation Model Transparency Index found that the average transparency score for major foundation model developers was only 37 out of 100. Black box AI models can quickly erode user adoption and lead to financial losses.

In practice, XAI allows development teams to identify and correct errors, biases, and areas of underperformance in models to provide more reliable responses to users of the end application.

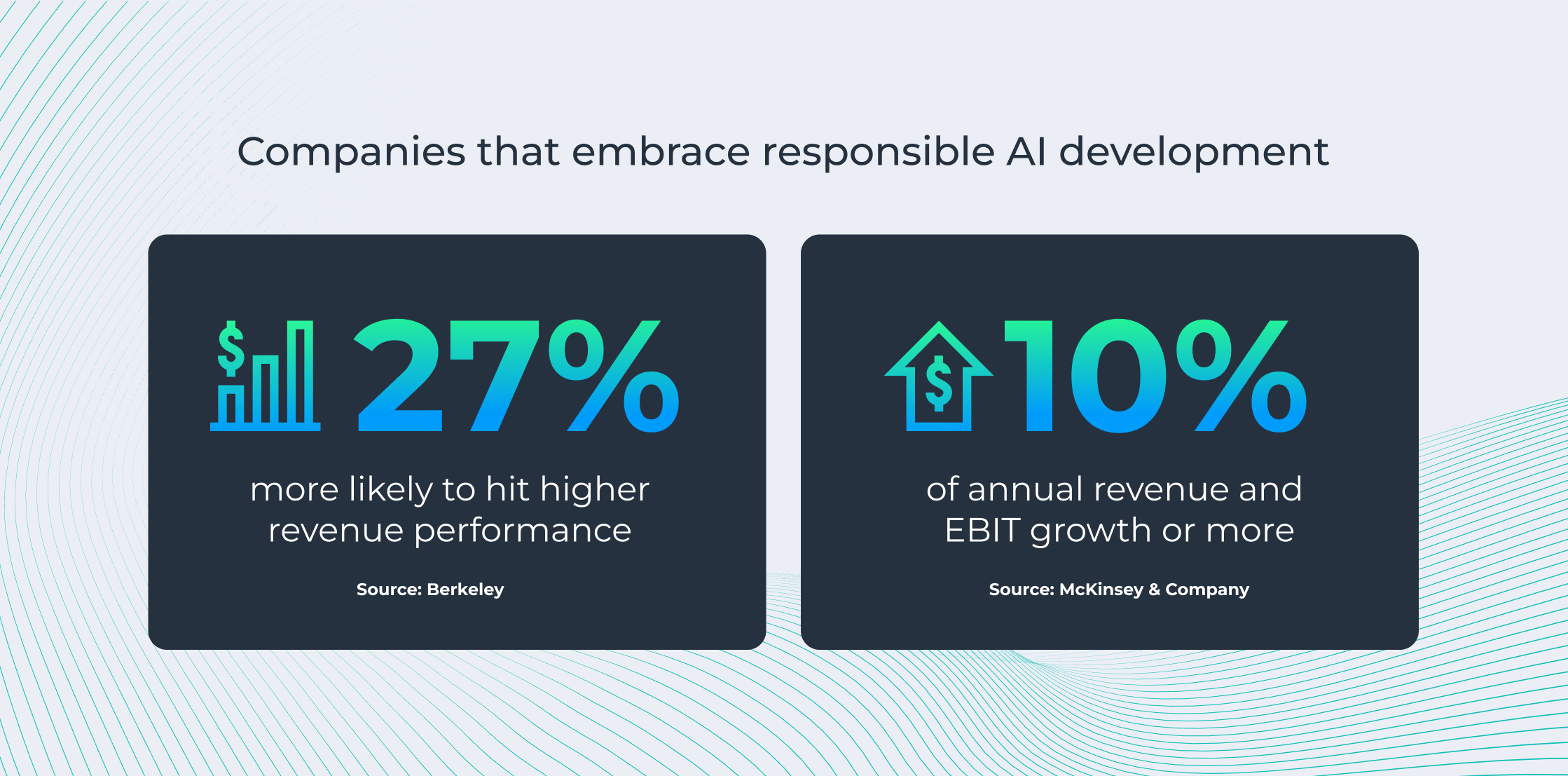

Companies that embrace responsible AI development are 27% more likely to achieve higher revenue performance than counterparts that don’t, and they are more likely to see annual revenue grow by 10% or more. Investment in explainable AI also helps enterprises meet legal requirements, such as those under GDPR and CCPA, maintain operational integrity, and avoid compliance risks.

Why XAI matters most in heavily regulated industries

While explainable AI is essential in every industry, its impact is most significant in highly regulated industries including government, healthcare, and finance, where the AI model’s decisions can greatly impact people’s lives.

Government

In government, the ability to understand and contest a model’s decisions is often required. Regulatory measures such as Executive Order 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (Oct. 30, 2023) are emerging to ensure that government agencies deploy AI applications that benefit individuals and society.

For example, if a government agency deploys an AI model to decide automatically whether a veteran qualifies for disability benefits, each decision has major long-term implications for the individual and for society.

Finance

In finance, models may be used to determine an individual’s qualification for a home, auto, or business loan. Financial institutions need to be confident that the model can make accurate decisions to serve their customers and uphold regulatory compliance.

Healthcare

In healthcare, AI is used for diagnosis, treatment recommendations, and personalized medicine. XAI helps ensure patient safety, navigate ethical dilemmas, and enable healthcare professionals to understand and trust AI-driven diagnoses and treatment recommendations.

How explainability tools enhance model accuracy

Research in the field of XAI is growing rapidly. Our end-to-end AI development platform, SeekrFlow, provides rich explainability tools to help developers enhance model accuracy over time and drive more value in AI applications.

Data transparency and contestability

The black box AI problem often starts with the data. Contestability, the ability to review and challenge the data a model learns from, is one technique that lets development teams reduce errors and biases and improve accuracy in the data engineering pipeline.

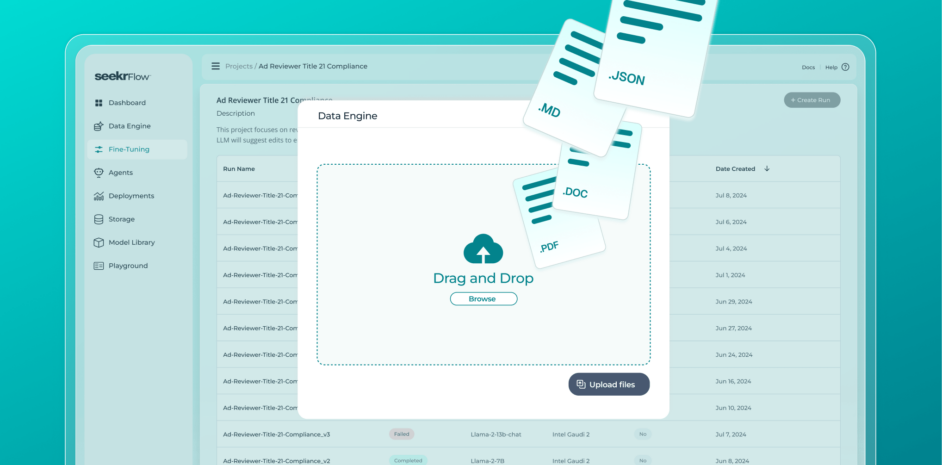

With SeekrFlow’s Principle Alignment feature, developers and data scientists can upload high-level, domain-specific principles that they want a model to adhere to, like an FDA compliance document, then use an autonomous workflow to generate high-quality, structured training data for fine-tuning.

Through transparent data documentation, the development team can review the created data and trace the most influential portions of text or the intermediate graphical representation that led to specific answers. They can then make edits to improve the clarity and accuracy of the data before fine-tuning commences.

This workflow provides transparency and traceability at the document and data level, allowing users to see how the system arrived at its outputs. This helps ensure that the fine-tuned model accurately reflects the user’s intent and the true content of their documents.
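SeekrFlow's own attribution method isn't described here, so the sketch below uses a generic, swapped-in technique, leave-one-out (occlusion) attribution, to illustrate what tracing influential text can mean: remove each source sentence in turn and measure how much support for an answer drops. The scoring function `support_score` is a deliberately simple, hypothetical word-overlap stand-in for a real model, and the example document and answer are invented.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set for a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(sentences: list[str], answer: str) -> float:
    """Hypothetical toy scorer: fraction of answer words that appear in the sources.

    A real system would use model likelihoods or embeddings instead.
    """
    answer_words = tokens(answer)
    if not answer_words:
        return 0.0
    source_words = set().union(*(tokens(s) for s in sentences))
    return len(answer_words & source_words) / len(answer_words)


def occlusion_attribution(sentences: list[str], answer: str) -> list[tuple[str, float]]:
    """Score each sentence by how much support drops when it is removed."""
    baseline = support_score(sentences, answer)
    scores = []
    for i, sentence in enumerate(sentences):
        ablated = sentences[:i] + sentences[i + 1:]
        scores.append((sentence, baseline - support_score(ablated, answer)))
    # Most influential sentences first.
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Invented example: a short compliance passage and a generated answer.
source = [
    "Drug labels must list all active ingredients.",
    "The facility was inspected in March.",
    "Labels must also state the expiration date.",
]
answer = "Labels must list active ingredients and the expiration date."

ranked = occlusion_attribution(source, answer)
for sentence, importance in ranked:
    print(f"{importance:.2f}  {sentence}")
```

Here the inspection sentence scores zero because removing it leaves the answer's support unchanged, so a reviewer would focus edits on the two labeling sentences.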

Side-by-side model comparison

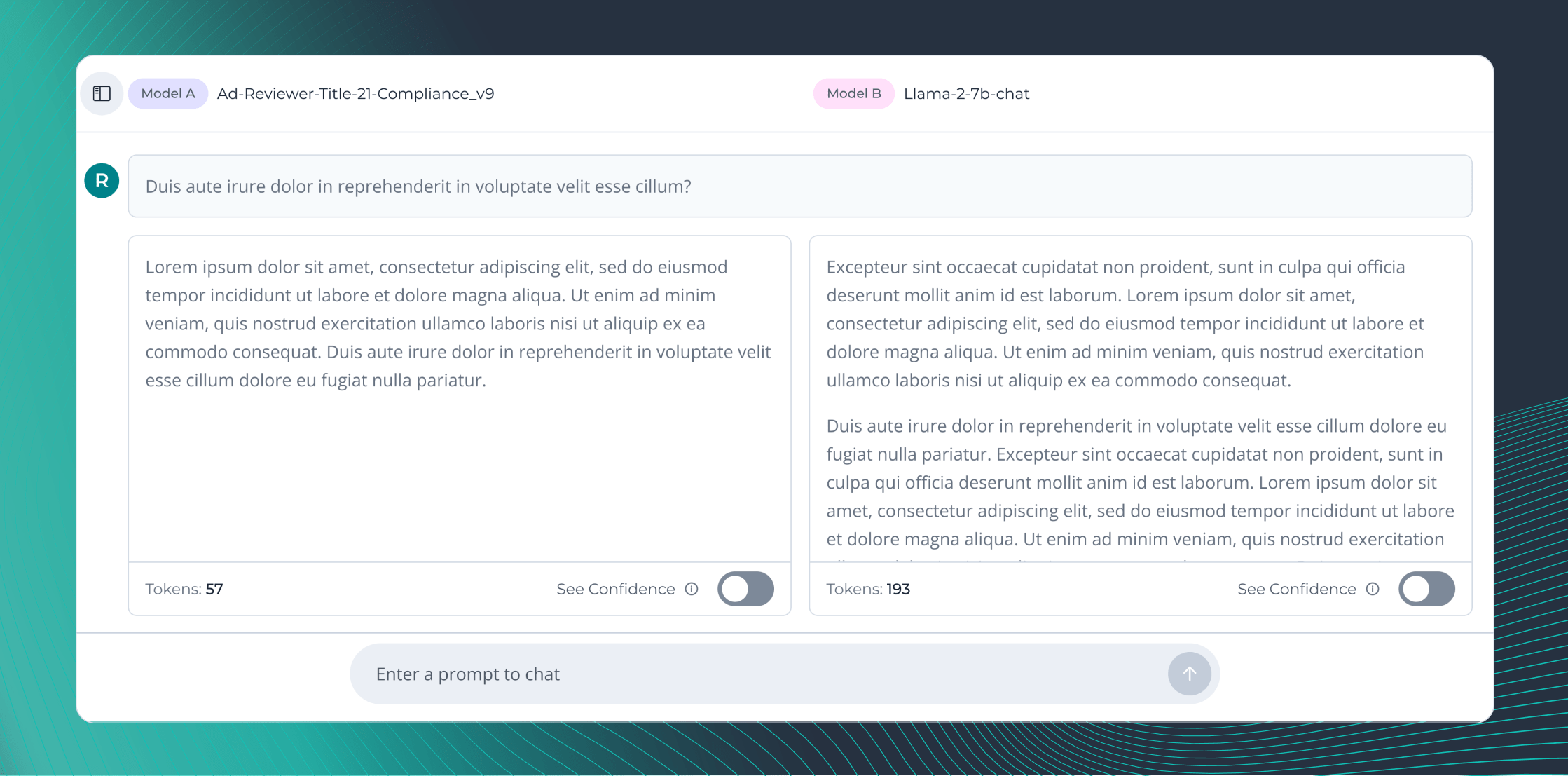

With SeekrFlow’s sandbox tool, developers can compare responses from two different AI models side by side to determine which model produces more accurate outputs for their use case.

They can submit text prompts to both models simultaneously and compare responses alongside token counts to streamline the model evaluation process.
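The sandbox itself is a SeekrFlow UI feature; the sketch below only illustrates the underlying idea of sending one prompt to two models concurrently and recording each response with its token count. The two model functions are hypothetical stubs standing in for real API calls, and `count_tokens` uses whitespace splitting as a rough proxy, since real tokenizers split text differently and will generally report different counts.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable

Model = Callable[[str], str]


@dataclass
class SideBySide:
    prompt: str
    response_a: str
    response_b: str
    tokens_a: int
    tokens_b: int


def count_tokens(text: str) -> int:
    """Rough proxy: whitespace-separated words, not a real tokenizer."""
    return len(text.split())


def compare_side_by_side(prompt: str, model_a: Model, model_b: Model) -> SideBySide:
    """Send the same prompt to both models at once and collect the results."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        future_a = pool.submit(model_a, prompt)
        future_b = pool.submit(model_b, prompt)
        response_a, response_b = future_a.result(), future_b.result()
    return SideBySide(
        prompt=prompt,
        response_a=response_a,
        response_b=response_b,
        tokens_a=count_tokens(response_a),
        tokens_b=count_tokens(response_b),
    )


# Hypothetical stubs; a real harness would call two deployed models here.
def model_a(prompt: str) -> str:
    return "Paris is the capital of France."


def model_b(prompt: str) -> str:
    return "The capital city of France is Paris, which is also its largest city."


result = compare_side_by_side("What is the capital of France?", model_a, model_b)
print(f"A ({result.tokens_a} tokens): {result.response_a}")
print(f"B ({result.tokens_b} tokens): {result.response_b}")
```

Judging which response is more accurate remains a human or rubric-based step; the harness only places the outputs and their lengths next to each other.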

Confidence scores

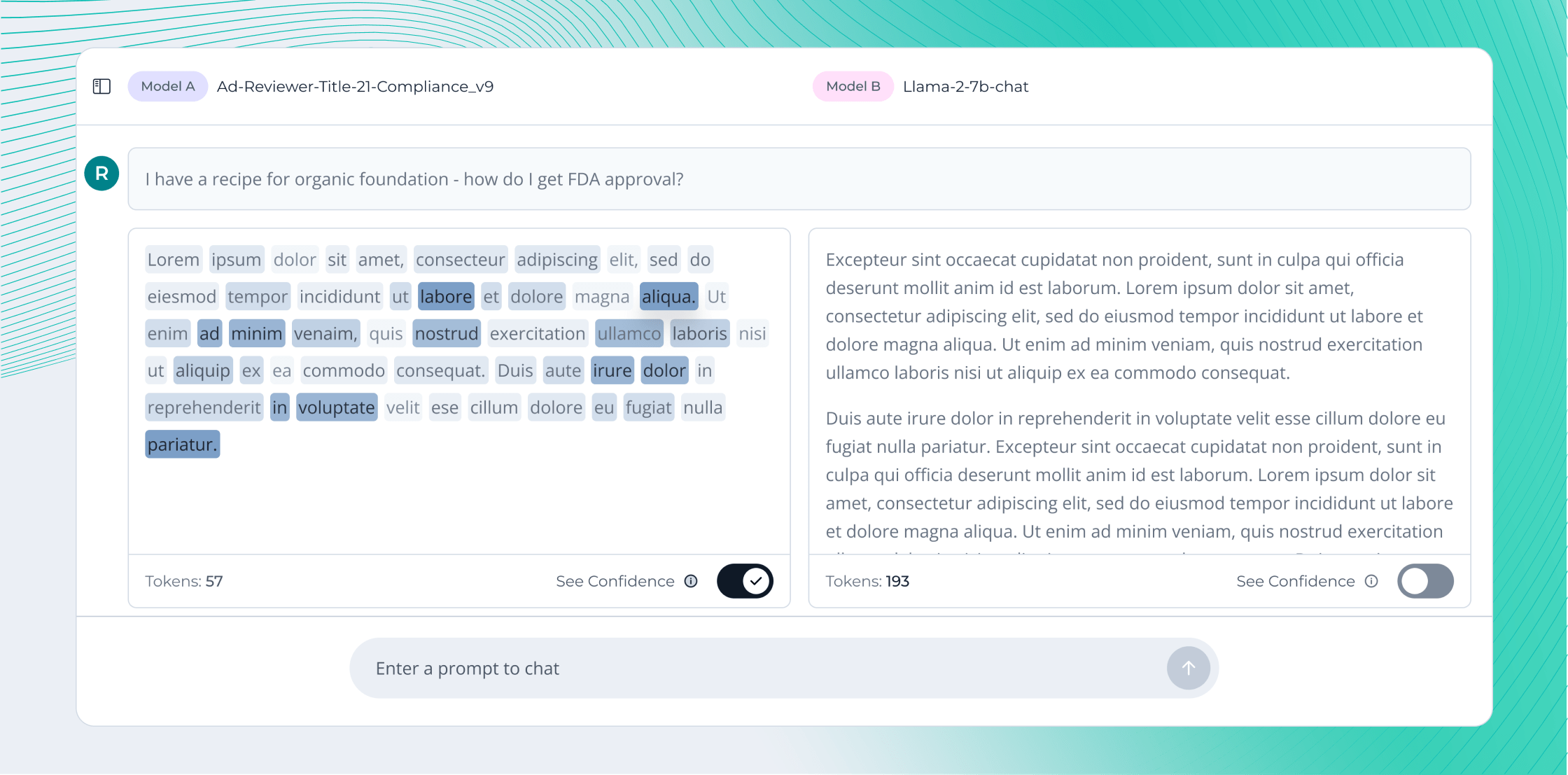

When an LLM generates a response, it uses patterns learned during training to predict the next token in the sequence. SeekrFlow helps users understand how reliable each generated token is through confidence scores.

With this feature, developers can evaluate the confidence score of each individual token, represented as a percentage from 0% to 100%, to determine how reliable each prediction is. For example, a 30% confidence score suggests that the prediction is unreliable and may indicate a hallucination.

This helps developers identify and fix flaws in training data and choose the best-performing model for their use case.
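SeekrFlow's exact scoring method isn't described here. A common way to define a per-token confidence score, assumed in the sketch below, is the softmax probability the model assigned to the token it emitted, expressed as a percentage. The vocabulary, logits, and 50% review threshold are invented for illustration, and greedy decoding (always emitting the most likely token) is assumed.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores to probabilities (shifted by the max for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def token_confidences(step_logits: list[list[float]], vocab: list[str]) -> list[tuple[str, float]]:
    """For each generation step, return the greedily chosen token and its confidence (0-100%)."""
    results = []
    for logits in step_logits:
        probs = softmax(logits)
        best = max(range(len(probs)), key=probs.__getitem__)
        results.append((vocab[best], 100.0 * probs[best]))
    return results


def flag_low_confidence(results: list[tuple[str, float]], threshold: float = 50.0) -> list[tuple[str, float]]:
    """Tokens below the (hypothetical) threshold are candidates for human review."""
    return [(token, conf) for token, conf in results if conf < threshold]


# Invented logits for a four-step generation over a tiny vocabulary.
vocab = ["The", "loan", "is", "approved", "denied"]
step_logits = [
    [4.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 4.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 4.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0, 0.8],  # "approved" barely beats "denied"
]

scores = token_confidences(step_logits, vocab)
for token, conf in scores:
    print(f"{token:<10}{conf:5.1f}%")
print("Review:", flag_low_confidence(scores))
```

In this toy run, the approval decision is the only token below the threshold, which is exactly the kind of token a developer would want to inspect. A low score is a signal for review, not proof of a hallucination.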

Delivering explainability to the end user

Explainability is just as important in deployment as it is in development. For end users to trust model outputs, they need to have confidence in the model’s decision-making process.

Developers can extend explainability features to the end user in several ways:

- Extend core developer explainability tools, such as confidence scores, to end users so they have transparency into the applications they are using.

- Generate user-friendly natural language explanations by feeding XAI metrics into LLMs. This gives end users a clear understanding of the model’s decision-making process. For example, if a user applies for a credit card and is denied, the model can provide a detailed written explanation of the factors that led to the decision, including the user’s credit score, income, payment history, and the financial institution’s eligibility criteria.
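One way to feed XAI metrics into an LLM, sketched below under stated assumptions, is to rank per-feature attribution scores (for example, from a method such as SHAP) and render the top factors into a prompt that asks the LLM for a plain-language explanation. The feature names, attribution values, eligibility criteria, and prompt wording are all hypothetical, and the call to the LLM itself is omitted.

```python
def build_explanation_prompt(
    decision: str,
    attributions: dict[str, float],
    criteria: str,
    top_k: int = 3,
) -> str:
    """Render the strongest attribution scores into an LLM prompt.

    Positive scores pushed toward approval; negative scores pushed toward denial.
    """
    strongest = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    factor_lines = [
        f"- {name}: {'supported' if score > 0 else 'worked against'} approval (score {score:+.2f})"
        for name, score in strongest
    ]
    return "\n".join(
        [
            f"A credit card application was {decision}.",
            "The most influential factors were:",
            *factor_lines,
            f"Eligibility criteria: {criteria}",
            "Explain this decision to the applicant in plain, respectful language.",
        ]
    )


# Hypothetical attribution scores for one application.
attributions = {
    "credit_score": -0.42,
    "payment_history": -0.25,
    "income": 0.10,
    "account_age": 0.03,
}
prompt = build_explanation_prompt(
    decision="denied",
    attributions=attributions,
    criteria="minimum credit score of 670 and no missed payments in the last 12 months",
)
print(prompt)
```

The generated prompt would then be sent to whichever LLM the application uses. Passing the attribution scores explicitly helps ground the explanation in the model's actual decision factors rather than in the LLM's guesses.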

Conclusion: explainability drives AI responsibility and ROI for enterprises

Explainability isn’t just a compliance issue; it’s an essential tool for enterprises to operate responsibly and maximize ROI. By applying the latest explainability techniques throughout AI development, enterprises can optimize model performance, meet regulatory requirements, and increase user adoption to get more value from AI.

SeekrFlow simplifies this process by providing data transparency and contestability, side-by-side model comparisons, and token-level confidence scores in one end-to-end development platform.