Cheap, Fast, or Trusted? The High-Stakes Balancing Act in the Race for AI Dominance

February 5, 2025

It’s no surprise that the emergence of DeepSeek has captured our attention. After all, DeepSeek has told us that powerful, low-cost GenAI is possible, and promises of big savings always catch the eyes of the technology, business, and government communities. While we still do not know exactly how DeepSeek achieved its cost savings, we can at least welcome these claims as a worthy challenge. That’s the good news.

Here’s the bad news: DeepSeek raises serious concerns about just how much trust and transparency AI requires before it’s deemed usable and useful. Even if DeepSeek is not the Chinese Trojan Horse many suspect, it is still too risky and unproven to be trusted for mission-critical use cases in government and regulated industries like finance, education, energy, pharmaceuticals, and transportation.

The lure of cheap AI at the expense of trust

While the allure of cheaper AI is understandable, it cannot come at the expense of security or transparency. No organization that values the integrity of its data, customers, intellectual property, or brand can afford exposure to DeepSeek’s unknown risks and security vulnerabilities. Many news outlets have already reported alarm across industries about the potential security risks and biased results associated with DeepSeek. Where does the data it processes end up? Is it safe to assume that everything users upload to DeepSeek will end up on Chinese Communist Party (CCP) servers? These are serious questions to weigh.

Some, including OpenAI, have speculated that DeepSeek is designed to steal intellectual property and manipulate Americans. These allegations are consistent with CCP strategies and actions, and this makes DeepSeek’s origin story more important than its low price tag. Non-secure GenAI can be a powerful tool for manipulation and geopolitical gain by any adversary, and the consequences of compromised data and manipulation are not hypothetical: from IP theft to cyberattacks targeting critical infrastructure or election tampering, the implications are real and dire. We should also not be naïve about how such data could serve as another stepping stone for adversaries learning about the US, its individuals, and its organizations, especially when combined with freely provided data (e.g., TikTok) or compromised data (e.g., health records).

Buyer beware! As the team at Seekr says: AI should be used to inform, not influence.

Finally, even if DeepSeek costs nothing, there are simply too many issues to trust it even for the most benign of use cases, let alone mission-critical ones. Because mission-critical use cases are the very ones with the highest impact and return on investment (ROI), it’s critical for government agencies and regulated industries to hold firm, despite the pressure to save money, and face up to the risks of using DeepSeek. The same scrutiny applies to any new AI service being evaluated for enterprise-level use cases.

What a difference trust makes

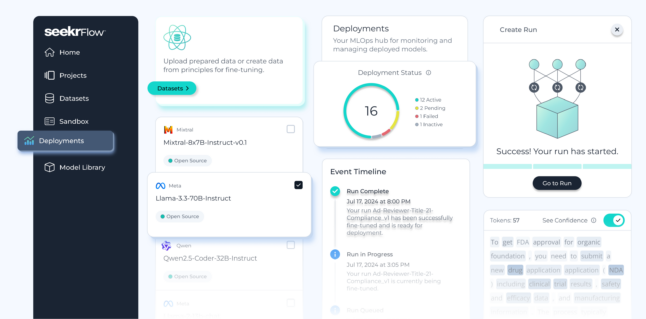

Seekr takes a different approach. The SeekrFlow platform is compatible with any commercial or open-source foundation model; however, Seekr only partners with AI companies whose technology it has vetted. By committing to high standards for security, privacy, and transparency, Seekr makes AI valuable for mission-critical use cases, powered by an organization’s own data. This approach to AI is not only transparent; it also produces more accurate results.

Conclusion

DeepSeek proves the market’s hunger for lower-cost AI, and we should all take note of potential advances in new and emerging tech. But DeepSeek’s uncertain emergence also underscores an undeniable truth: without trust, AI is unusable where it matters most. The future of AI depends on a careful balance between innovation and risk management. And for now, the stakes are too high to ignore the potential dangers lurking under DeepSeek’s hood.